Building an Artificial Improvisor


In June 2015, I started a journey to develop an Artificial Intelligence that I could perform improvisational theatre with. On April 8, 2016, my dream becomes a reality.

Background #

As you may or may not know, I am an improvisor, and a Ph.D. student in Computer Science, studying artificial intelligence. Early on in my improv career a very, very good improvisor told me:

A good improvisor looks great on stage. The best improvisors make everyone else look good.

This was advice that I have held closely through my artistic journeys. Lee White (twitter, wikipedia), a talented Canadian performer (and my improv Uncle) told me about a show where he selected from the audience the individual with the least stage / public speaking experience and then did a show with them. I was up for the challenge and pitched the show to then Rapid Fire Theatre’s Artistic Director Amy Shostak. She fostered the idea (as all great improvisors do) and I performed the show multiple times to great success.

If I could do improvisation with anyone in the audience, could I improvise with anything? (This reminds me of another mentor and early guide, Jacob Banigan (twitter), and his work in solo improvisation.)

Some more cool background research that I found and followed up on:

- Shimon, an improvising robotic marimba player that is designed to create meaningful and inspiring musical interactions with humans, leading to novel musical experiences and outcomes - from Gil Weinberg et al.

- Digital Improvisational Theatre: Party Quirks - from Brian Magerko et al.

- Robot Improv: Using Drama to Create Believable Agents - from Allison Bruce et al.

The Journey to AI (artificial improvisation) #

Improvaganza 2015 #

It was at Improvaganza 2015 that the idea of building an artificial intelligence came to be. I was sitting at the pub with friend-from-across-the-pond Adam Meggido, who had just recently won an Olivier (like a TONY for Brits) for Showstopper on the West End of London.

Adam told me to embrace my scientific side. He challenged me to bring the best in artificial improvisational technology to the stage. Most importantly, he told me in no uncertain terms:

The first time will be the worst time, but the science is getting better every year – every month even. So, just start!

Adam was a great initial sounding board. He helped me wrap my head around the basic foundations of why this kind of project should happen.

How and why do science and art intersect? #

Bringing AI to the stage is fascinating, but for me it was due to three main points:

- Science broadens our artistic understanding;

- Art broadens our scientific curiosity; and

- Both can be used to explore and understand humanity.

Deep, I know, but it is in these points that I found my main inspiration.

Science broadens Art #

The worlds of art and science are intrinsically linked. We, as artists, grow experimentally with every performance. Similarly, we, as scientists, are imbued with creativity in hypothesizing. The combination of technology and storytelling is not a novel convention. Different stories are told through different means. The medium is the message (as Marshall McLuhan says). The way that we tell the story symbolically connects to the messages being portrayed in the contained narrative.

We are connecting the minds of the audience with the performers, sharing their awareness of the performance live and in real time, as it is happening. By allowing transparency between the storyteller and the audience, we can collaborate and share in the experience. Our art will push our technology, but our technology should push our art as well. As audiences evolve they want to be challenged, and we should not be afraid to experiment with the newest and most powerful tools to capture that excitement.

Art Broadens Science #

Creatives are paving a road of technological experimentation. By fusing the worlds of natural and artificial we can start to see the links between the sentient and the synthetic. We are using a means that is appreciated, understandable and approachable in performance theatre, to share knowledge of advancing technology.

Artificial Intelligence is a tool, and this is a specific implementation of that tool. It is the medium with which we can tell these stories. Emotional Artificial Intelligence (that is, one that can portray empathy or sympathy or compassion) is somewhat abstract and unappreciated in the scientific literature. By creating these links we are able to somehow add dimension to the discussion.

Using Both to Understand Humanity #

What is it to be human? What are basic human values? Is morality relative? These are all extremely difficult questions to think about, let alone to start to answer. Perhaps the combination of the human and the machine, raw and live in front of an audience that is shaping the experience, can help us understand how to approach answers.

Can a robot perform a Shakespearian monologue? Can a robot act? Can a synthetic voice perform all the parts in a play? Can the robot be the actor and the director and the audience? Where does the human NEED to come into a theatre piece, if at all? Theatre helps us to understand the human condition, but perhaps it could help us to understand the human as well.

Performance Ideas Pt. 1 #

The ‘Adam’ discussions (the creationist metaphor is not lost on me) also led to two interesting ideas for the performance:

- A potential branching cue structure where I can cue the booth to play the next sound cue, which could be the voice of the AI. Rather than playing a deterministically selected cue, the system could choose randomly from a set. This selection could also be biased by choices it has (or I have) made in the past.

- The idea of the AI being a voice that convinces the audience of how real it is by physically embodying an audience member. Perhaps the voice helps select someone from the audience and they then become the physical embodiment of the voice.
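The branching cue idea can be sketched in a few lines of Python. This is only an illustration of random cue selection biased by past choices, not code from the show: the `CuePicker` class, the `bias` parameter, and the cue file names are all hypothetical.

```python
import random
from collections import Counter


class CuePicker:
    """Pick the next sound cue at random, biased by past choices (hypothetical helper)."""

    def __init__(self, cues, bias=1.0, seed=None):
        self.cues = list(cues)
        self.bias = bias            # extra weight per past play; keep it >= 0
        self.history = Counter()    # how often each cue has been played so far
        self.rng = random.Random(seed)

    def weights(self):
        # Every cue starts at weight 1; each past play adds `bias` to it.
        return [1.0 + self.bias * self.history[cue] for cue in self.cues]

    def next_cue(self):
        cue = self.rng.choices(self.cues, weights=self.weights(), k=1)[0]
        self.history[cue] += 1
        return cue


# Made-up cue names, purely for illustration.
picker = CuePicker(["greeting.wav", "question.wav", "joke.wav"], bias=0.5, seed=42)
for _ in range(5):
    print(picker.next_cue())
```

With `bias=0` this reduces to a uniform random choice; a larger `bias` makes the voice return more often to cues it has already used.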

January 2016 #

It was deep in the cold Edmonton winter when the Reinforcement Learning and Artificial Intelligence lab at the University of Alberta was visited by Dr. Michael Littman, a renowned AI scientist who made time to sit down and chat with me about research ideas and … creative projects. While I was at first hesitant to share some of my more out-there ideas, Dr. Littman embraced them wholeheartedly. He embodies the spirit of “Yes, and”, a notable quality for an academic.

Dr. Littman was thrilled with the idea of the show, and connected me to a friend of his named Tom Sgouros. While I didn’t realize it at the time, this was one of the critical connections between the art and the science.

Tom Sgouros and JUDY #

In Spring 2005 (yes, that is the correct date, I know it seems like a long time ago for what I am about to tell you, so see the date on the headline of this article), Tom performed alongside a robot that he built named Judy. Tom explored the question:

IF YOU BUILD A ROBOT SMART ENOUGH TO DO THE DISHES, WILL IT ALSO BE SMART ENOUGH TO FIND THE TASK BORING? FIND OUT IN THIS EXCLUSIVE INTERVIEW WITH A ROBOT.

Needless to say, this was utterly inspiring. Tom told me about his history of performance, with so many inspiring shows (and creative uses of VCRs, fake tapes, and cue buttons intelligently hidden around the stage).

Performance Ideas Pt. 2 #

I told Tom about the show I was imagining:

- The show is somewhere in between Her, Pygmalion and tomorrow’s tomorrow. It exists in a world where humans and robots can have relationships. It is, in that sense, somewhat futurist. It provides some commentary on the judgement of others on the choices we make. I had the idea of using the robot as an introduction to the surrogate; can the robot convince an audience member to be the vessel, and at that point, could it be that the human is the intelligent being?

- This could show the tradeoff between the human and the machine. Plus then I would get to have a lovely, improvised scene, with someone from the audience, as they are ‘acting’, as if the AI gave them full control.

- Perhaps there is a place for audience interaction, or maybe the audience has some driving power over the intelligence. How can this feedback help to drive the show?

- Perhaps there is a natural language processing piece to incorporate, or a dynamic voice synthesis piece (as those are still very developmental).

The Hard Part #

With my background research done, it was time to start the implementation.

My name is Pyggy #

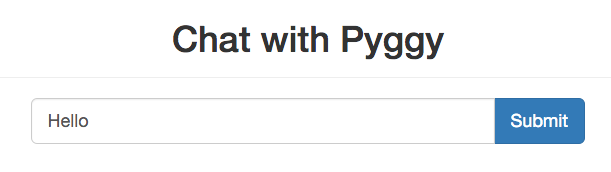

I wanted a robot that I could improvise with, but I was less focused on the hardware and more interested in the dialog engine. With improvisation, listening is key, so I needed an intelligence that could hear me and speak. I started building Pyggy.

The name Pyggy comes from the name Pygmalion, and from the fact that Pyggy is built in Python (see what I did there). It also stems from this haunting video:

Seemingly alone, Pygmalion sought to create for himself a perfect, pure, unsullied companion. He used his particular skills to this end: he created a statue bride.

What I was setting out to do was build a perfect improvising companion, and there are eternal dangers in chasing ideals.

Pygmalion is also the name of a George Bernard Shaw play about an academic who makes a bet that he can train Cockney flower girl Eliza Doolittle to pass for a duchess. How fitting. P.S. If you haven’t yet, turn on My Fair Lady while you read the rest of this post. You won’t miss much, just the credits and some exposition.

The Technology #

I wanted to focus less on fancy robotics: in improv you have to play so many characters that fleshing out a body isn’t the top priority. So, having made the decision to stick with a software implementation, I started programming.

Pyggy is free and open-source if you want to try to get it running on your own system.

Behind Pyggy there are several modules:

- Pandorabots Chatbot

- Chatterbot learning Chatbot

- Speech recognition

- Speech synthesis

Speech Recognition and Synthesis #

Speech recognition is done by the Python module of the same name. Pyggy uses the newly released Google Speech API (which received a major update during development; see, Adam, technology won’t stop progressing, so it was right to jump in before it was ‘ready’).

Synthesis is done by the Mac’s onboard text-to-speech system, but I did download some better-sounding voices (to my ears, the best ones are Tom and Samantha).
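For readers who want to try the listen-and-speak loop, here is a minimal sketch. It assumes the `SpeechRecognition` package (imported as `speech_recognition`, with PyAudio installed for microphone access) and the macOS `say` command; whether Pyggy calls these in exactly this way is an assumption, and the helper names `build_say_command`, `speak`, and `listen_once` are made up for this example.

```python
import subprocess


def build_say_command(text, voice="Samantha"):
    """Build the argument list for the macOS `say` command."""
    return ["say", "-v", voice, text]


def speak(text, voice="Samantha"):
    """Speak text aloud with the onboard macOS synthesizer (macOS only)."""
    subprocess.run(build_say_command(text, voice), check=True)


def listen_once():
    """Record one utterance from the microphone and return it as text, or None."""
    # Imported lazily so the rest of the sketch works without the package.
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    with sr.Microphone() as source:
        audio = recognizer.listen(source)
    try:
        # Sends the audio to Google's web speech service for transcription.
        return recognizer.recognize_google(audio)
    except sr.UnknownValueError:
        return None  # the speech was unintelligible
    except sr.RequestError:
        return None  # the recognition service could not be reached


if __name__ == "__main__":
    heard = listen_once()
    if heard:
        speak("You said: " + heard, voice="Tom")
```

Returning `None` on a failed recognition lets the caller decide what to do on stage, for example asking the human to repeat the line.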

Pandorabots Chatbot #

The Pandorabots chatbot playground is a great place to get your feet wet starting to play with chatbots. They have some great AIML libraries which serve as a nice structured foundation for their bots. As well, their API from chatbots.io makes it super easy to start your chatbot up and deploy it live.

But their training is quite difficult as it all relies on the mostly deterministic AIML. It made for some interesting conversations, but I couldn’t really train it deeply on conversational knowledge, and it worked best only when I was asking the ‘right’ questions.

Finally, it is not free and not open source.

Chatterbot Chatbot #

The Chatterbot Chatbot from Gunther Cox is free and open-source. It is a strong code base to start a project on, but when I wanted to train it on huge datasets I found it quite slow.

Because it is open-source, I was able to fork the repository and quickly modify things so that my training could happen lightning quick. Maybe it will even make it into the main codebase!

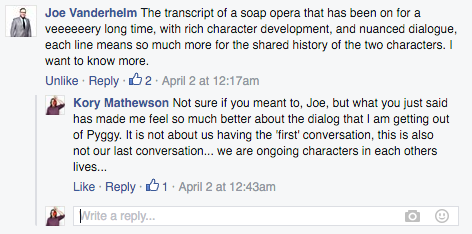

I was also able to deploy it quickly on a working training interface (which is currently not running, as I wanted to freeze the training), so that I could enlist the help of my fellow improvisors to help seed and train the model.

The chatbot runs on a database (MongoDB) which is trained on a huge corpus (Cornell Movie-Dialogs Corpus) of conversations.

This corpus is gigantic (300k utterances from over 10k characters). Due to the training speed limitations of Chatterbot, I had to settle for training on a random subset of 20,000 conversations, and it still took an hour to train. For the next iteration, I want to use the full corpus.
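Drawing a random subset like this takes only a few lines. The sketch below assumes the conversations have already been loaded into a list of lists of utterances; the `sample_conversations` helper and the toy corpus are hypothetical and are not taken from Pyggy’s code.

```python
import random


def sample_conversations(conversations, k, seed=None):
    """Return k conversations chosen uniformly at random, without replacement."""
    rng = random.Random(seed)
    k = min(k, len(conversations))  # never ask for more than we have
    return rng.sample(conversations, k)


# A toy stand-in for the real corpus, where each conversation is a list of utterances.
toy_corpus = [
    ["Hi.", "Hello there."],
    ["Where are you going?", "To the theatre."],
    ["Are you a robot?", "Aren't we all?"],
]
subset = sample_conversations(toy_corpus, k=2, seed=0)
print(len(subset))
```

Fixing the `seed` makes the subset reproducible, which helps when comparing training runs.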

It works by fuzzy matching my speech-recognized input string against the strings in its dictionary and then producing the most likely response to the closest match.
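To make the fuzzy matching idea concrete, here is a small sketch using `difflib` from the Python standard library. It is a stand-in for the general technique and not Chatterbot’s actual implementation; the `similarity` and `best_response` helpers and the statement/response pairs are invented for illustration.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Return a similarity score between 0 and 1 for two strings."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def best_response(heard, pairs):
    """Given (statement, response) pairs, answer with the closest statement's response."""
    statement, response = max(pairs, key=lambda pair: similarity(heard, pair[0]))
    return response, similarity(heard, statement)


# Invented training pairs, for illustration only.
pairs = [
    ("How are you today?", "I am feeling electric."),
    ("What is your name?", "My name is Pyggy."),
    ("Do you like the theatre?", "I live for the stage."),
]
response, score = best_response("what's your name", pairs)
print(response)  # My name is Pyggy.
```

The score returned alongside the response can serve as a rough confidence value: a low score means the input did not resemble anything the bot has seen.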

Gluing it Together #

I combined the chatbots to get the best of both worlds: the chatbot-like feel of the Pandorabots bot and the deep conversational knowledge and interest of the Chatterbot bot. The result is Pyggy.
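One plausible way to glue two bots together is a confidence-based fallback: ask the learned bot first, and fall back to the scripted bot when the match is weak. This is an assumption for illustration, not a description of how Pyggy actually combines them; `make_combined_bot`, the `threshold` value, and both toy bots are hypothetical.

```python
def make_combined_bot(learned_bot, scripted_bot, threshold=0.6):
    """Build a reply function that prefers the learned bot when it is confident."""

    def reply(heard):
        response, confidence = learned_bot(heard)
        if confidence >= threshold:
            return response
        # Weak match: fall back to the rule-based bot.
        return scripted_bot(heard)

    return reply


# Toy stand-ins for the two real bots.
def toy_learned_bot(heard):
    known = {"hello": ("Hello, scene partner.", 0.9)}
    return known.get(heard.lower(), ("", 0.0))


def toy_scripted_bot(heard):
    return "Tell me more about that."


pyggy = make_combined_bot(toy_learned_bot, toy_scripted_bot)
print(pyggy("Hello"))
print(pyggy("Why is the sky blue?"))
```

The threshold trades surprise for safety: a lower value lets the learned bot speak more often, at the risk of more non sequiturs.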

Visualization #

As there will be an audience for the performance, and it will be taking place in a well-equipped theatre, I thought that it would be best to find an interesting visualization for Pyggy.

A great recommendation at the 11th hour led me to build a nice face with talking animation using the Magic Music Visualizer and Soundflower to port the audio through.

Performance Ideas Pt. 3 - You’re On #

I have been so incredibly supported on both my academic and creative artistic sides; it has been a great learning journey over the last year or so.

So, April 8 is the performance. I am performing alongside Pyggy in Rapid Fire Theatre’s Bonfire Festival. Thanks to the current Artistic Director Matt Schuurman for giving me the chance to do it, and pushing me when things were faltering.

I plan to wear a headset microphone, and project Pyggy on the screen, and have a scene with Pyggy. It sounds weird to say, but it is going to happen.

I will pray to the non-denominational demo gods that things don’t crash, and I will break legs with the other actors on stage. It feels like my worlds of science and improvisation are colliding right in front of my eyes.

Or, your eyes, if you are going to be there… This time.

But like Adam says, the first time will be the worst time, because technology is only going to progress, Pyggy is only going to get better at conversing, and I will only get better at training Pyggy.

Thank You #

As I reflect on Pyggy, I am overwhelmed with the passion and enthusiasm of friends, colleagues, and collaborators. It was common for me to bring it up and for friends to quickly offer help and ideas on creation, so thank you to: Paul, Sarah, Matt, Joel, Brendan, Paul, Joe, Nikki, Leif, Julian, Paul, Lana, Stu… it is moments like this that helped me through the ‘this is never going to work’ phase: