Text Message Chatbot built with Twilio, TensorFlow, Annoy, and Love

Table of Contents

Artificial improvisation is improvised theatre performed alongside intelligent machines. For a recent artificial improvisation show (Improbotics produced by Rapid Fire Theatre and HumanMachine), I developed multiple technical improvisation formats. In this post, I will tell you about the technical setup for one of the formats in particular.

Hi! I am JannJann.

I developed JannJannn to be a system which 1) audience members could chat with, and 2) the content of their interactions could be then used immediately in the performance.

I thought it might be interesting to keep notes on how I built that system and how all the pieces fit together. This project is built using open-source software. You could make your own!

Here is a news clip describing the show and the way we used JannJann:

Step 1: Accepting Inputs aka “Can I get a suggestion, please?” #

For audience members to interact, they would need a mode of interaction. At improv shows, audiences often yell out suggestions or write them on slips of paper. I wanted the audience to be able to privately interact, in a unique and intimate way. Text messages serve this purpose well. I have used SMS as a medium previously, in botrotica: building a sext bot.

For SMS interaction, I used Twilio. Twilio assigns a public phone number which can then be used to capture and reply to text messages and/or phone calls.

I first used Twilio for a Secret Santa gift I developed in 2012 (discussed briefly in this previous post). For that gift, I asked friends to send a private message, or call and leave a private voice message, with reasons why a mutual friend of ours is great. I collected hundreds of messages, and then programmatically displayed them on a webpage-built-for-one; that is, a private webpage that only the lucky gift recipient could view.

Twilio makes it easy to set up an SMS endpoint, and with that configured, and a public number set up, it was on to the next steps. The model which would take the inputs from the audience members, and respond with meaningful, chit-chat dialogue.

JannJann’s phone number presented during the performance.

Step 2: Responding Accordingly #

With hundreds of audience members sending multiple messages per minute, I needed a fast model which could respond relatively meaningfully. Time is of the essence because, during the show, the time when the audience could interact over text message was going to be limited to 60-90 seconds.

So, while neural networks in vogue, nearest neighbour approaches can respond much faster. So, I built a simple chatbot baseline system named Jann (just approximate nearest neighbour). Jann can use any input text you want. You might want to build a chatbot from your own Twitter history (follow me @korymath), or from your Grandmother’s recipe book. Anything you can think of, Jann can use as input. For the performance, I used a large database of human conversations, namely the Cornell Movie Dialog Corpus.

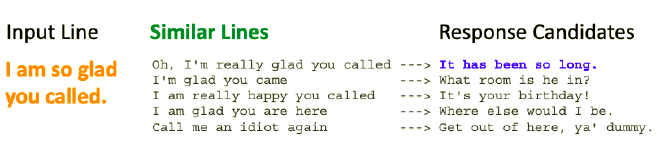

This is a fantastic dataset that is well formatted and easy to parse. To generate interesting and meaningful responses to the text messages from audience members, it follows a clever algorithm. First, find lines in the database which are most similar to the audience input line. Second, randomly return the response to one of these lines.

These responses may not be coherent over multiple steps. It is only trying to respond to the latest audience input. To ensure that the wires do not get crossed, each audience members is interacting with their “own” version of Jann.

Step 2.1: It’s a beautiful day in the approximate nearest neighbourhood… #

The “finding similar lines” step from the algorithm above is straightforward, though a little more technically in-depth. If you want to follow along with how Jann builds up a fast-access database of responses and then serves them to many users at once, check out the README and the source code.

First, a list of (input, output) pairs is collected into a large list. Then, each input is embedded into a semantic space using the Universal Sentence Encoder (paper, tfhub, tfjs). The encoder converts each sentence into a list of numbers (or vector), such that similar sentences are closer together than sentences which are not similar.

This list of embedding vectors (or array) is saved with a corresponding link to each (input, output) sentence pair. This correspondence is done using a unique identifier, or key. The embedding array is indexed using the fast Approximate Nearest Neighbour library from Spotify called Annoy. Indexing breaks up the embedding array into a collection of searchable sub-groups. This index saved to memory. It loads quickly and given an input vector, it can find similar vectors quickly. It is quick in large part because it is approximate. That is, it may not return the true nearest neighbour. This approximation is the trade-off made for speed.

Jann matching an input line and then providing a response.

Finally, Jann builds a callable endpoint by chaining the encoding and approximate nearest neighbour search operations. So, the algorithm for response generation is as follows:

- Given an input sentence

- Jann encodes the sentence into a vector

- Find the N most similar vectors to this vector

- Lookup the key corresponding to this vector

- Lookup the (input, output) lines corresponding to this key

- Randomly (or not-so randomly) select and return one of the output lines.

Step 2.2: You got served! #

Jann uses a Python-based Flask app for the backend. This allows for quick and easy use of the TensorFlow Hub module for the Universal Sentence Encoder. It also allows for a lightweight, robust web-server to be spun up quickly and easily using gunicorn. This allows for hundreds of requests per second to be handled by the system.

This configuration is rapidly deployable on any cloud service for a few dollars a month. I used Digital Ocean because I wanted the experience for another project I am working on. You will need to set up your cloud system to properly serve Jann, here are details on how to do just that.

Once you have the server endpoint running, you can follow the load testing scripts (using locust) located in the Jann repository.

Step 3: Interact with all the things! #

At this point, you have a Twilio input, and you have outputs which are ready to be sent back to the user. It would be relatively straightforward to create a direct link from your Twilio endpoint to the Jann model.

But, I wanted to be able to interact with Jann over more than just the Twilio input. I wanted to be able to use Twitter, Google Home, and a basic website as well. Luckily, there is a tool that enables all of these interactions in a few clicks, that is Google DialogFlow.

DialogFlow handles the pipes connecting inputs and outputs and can be used to serve all kinds of dialogue systems. It is designed, in large part, for domain-specific dialogue systems, and coding rules for conversation flow (hence the name, hint hint). That said, it is equally useful for chit-chat dialogue, by passing on the response generation and logic to an endpoint you want. For instance, you can point it to Jann, which at this point is running peacefully in the cloud.

Configuring your default response handler to be Jann endpoint passes inputs along, and handles the responses, regardless of the medium of interaction (e.g. Twilio, Google Home, Twitter, etc.)

Step 4: Break a servo! #

All the pieces were in place. All it took was the posting of a phone number on the screen alongside a countdown timer and the audience took it from there.

Note that the show happened four times, and each time there were hundreds of interactions in the short conversation window. It was exciting to watch out over the audience and see them interacting with the system. We could hear the snickers and giggles when Jann responding with something less-then-congruent to an audience members input.

As the inputs were streaming in, they were being captured to a temporary log file. Following the conversation window, we played the classic improvisational theatre game “Lines of Dialogue” with the lines of the audience as the lines to fill in the blank. In this game, random lines are interjected into the scene and the performers must justify the lines in the context of the scene.

We could sample randomly from the log with a single button press. The system would then take one of the lines, synthesize it (using Google WaveNet on Cloud Text-to-Speech), and vocalize it over the house speakers. The effect was such that as the scene progressed, every so often a performer would cue the random line, and it would be said by the AI’s voice. These random lines were delightful to the audience, for they were the creators. In these moments, we were writing the show together.

Step 5: Retrospeculative #

The number one rule of improvisation is to say “Yes, and...” Accept your scene partner’s offers and response with additional information.

The conversations with the audience members were interesting and provided for exciting random lines. They were also incoherent with the narrative progression. In my other work, I have aimed to build systems which can understand scene content and context and respond with more meaning.

The Artificial Language Experience (or A.L.Ex.) aims to do exactly this, and we used A.L.Ex. in other parts of the show. For more information on A.L.Ex. check out Improbotics and HumanMachine.

Jann does exactly what it sets out to do. It provides related responses as fast as possible, to as many individuals as possible, with control over the content so that we do not respond with anything lude, crude, or offensive.

I hope that this technical dive gives you some inspiration for the next technical performance system you build. If you want, try Jann out now! Or, at least, give it a star, fork it, or comment on GitHub.