The Cherry Pick Ratio

If my feeds on LinkedIn and X are to be believed, AI models generate nothing but useful, incredibly high-quality outputs. That is unlikely to be the case. In fact, I don’t actually know how much effort sits behind each generated image, video, song, or hilarious joke that gets shared. There is something hidden in the way we talk about generative AI.

How hand-picked are those outputs?

What do I mean? Well, a generative model is trying to do two things. The first is to learn patterns across all of its training data so it can create new, high-quality outputs. This is the ‘hard’ science of generative AI: improving the model, the data, and the training process to generate higher-quality outputs.

But we also expect the model to accomplish a second, much more subjective task. It must translate some input from the user (e.g. a text prompt or an image) into a generated output that satisfies the user’s expectations. Sometimes the user knows what they want. Other times they will “know it when they see it”. Either way, the first output is rarely the final one. It could take ten, twenty, or thirty generations before the model produces an output the user is happy with. Or worse, before the user gets frustrated and gives up.

This leads me to a question that I often discuss with peers, but that is underexplored in the literature on generative models: how hard are users willing to work to get to a final result?

This question is at the core of the adoption of generative models. For a model to be capable, it needs a foundation of good modeling, good data, and good training. But for the model to be widely adopted, it needs to be more than capable: it needs to feel useful to people. Concretely, the user needs to feel that the model is worth the time, effort, and money it takes to create something useful with it.

In the literature, this quality is described in a few different ways: prompt adherence, or how well the outputs align with the prompt; steerability, or how well users can direct the behaviour of a generative model; and, more generally, controllability, or how easily users can get the model to accomplish their objectives. But there is no clear definition or measure of the point at which users feel a model can do something useful for them.

What if there was?

What is Cherry Picking?

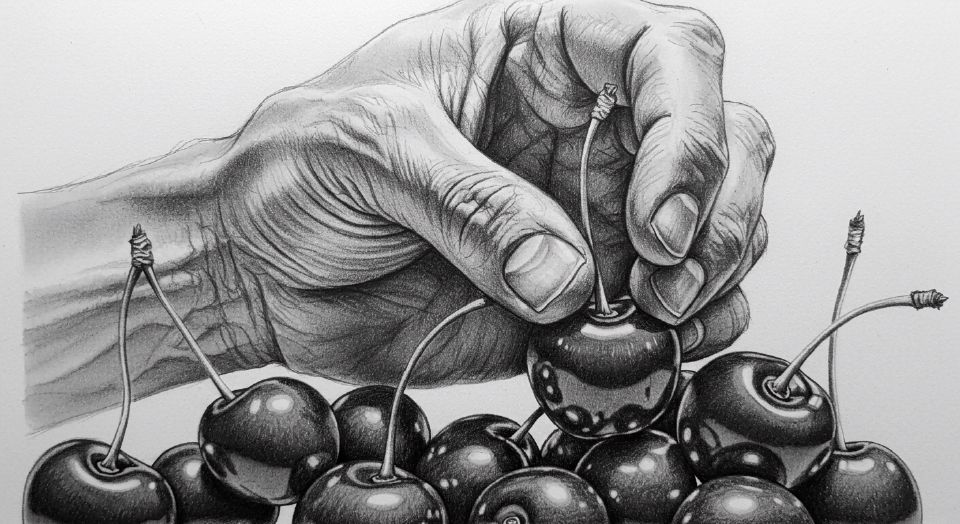

To start, let’s call an output that the user deems useful a “cherry-picked output” (CPO). The outputs we end up seeing are the ones that users have cherry-picked from a large number of samples. Many generated samples are discarded because they do not align with the user’s expectations, and many others because the model failed to generate outputs of high enough quality.

Either way, users dig around to find a handful of the best outputs in the bunch. They cherry-pick, then showcase only the best. So, how much work does it take to find these cherry-picked outputs?

What is the Cherry Pick Ratio?

I want to measure what it takes to produce a cherry-picked output. This measure correlates with 1) the time it takes the user to iteratively construct and modify their inputs to the model and 2) the time it takes the user to review, rank, and curate all the generated outputs.

To simplify, I define the Cherry Pick Ratio (CPR) as the number of useful outputs divided by the total number of outputs generated.

Cherry Pick Ratio = Useful Samples : Total Samples

How about a worked example? Let’s say I am working with a chatbot to write a funny joke (i.e. one that I find funny enough to include in this post). I ask the chatbot to generate 5 jokes about cherry picking. And, because the first prompt didn’t work so well, I try two different input prompts, so I end up with a total of 10 outputs. If it takes ten samples in total to produce a single usable output, then the model’s CPR is 1:10. If, on the other hand, the very first joke it produced had been funny, the CPR would be 1:1.

For reference, here’s the best I could get from a total of 10 outputs: “Low-hanging fruit: because success tastes better when you barely try.”
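The arithmetic in this example is simple enough to sketch in a few lines of Python. This is a minimal, hypothetical illustration rather than a tool from this post: the helper name `cherry_pick_ratio` is invented here, and it assumes every generated sample is counted once and judged either useful or not. It keeps the raw counts rather than reducing the ratio, so 2:10 stays 2:10 instead of becoming 1:5.

```python
def cherry_pick_ratio(useful: int, total: int) -> str:
    """Return the Cherry Pick Ratio as a 'useful:total' string.

    Hypothetical helper: assumes each generated sample is judged
    either useful or not, so 0 <= useful <= total.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= useful <= total:
        raise ValueError("useful must be between 0 and total")
    return f"{useful}:{total}"


# The joke example: two prompts, five jokes each, one keeper.
prompts = 2
jokes_per_prompt = 5
total_samples = prompts * jokes_per_prompt
useful_samples = 1

print(cherry_pick_ratio(useful_samples, total_samples))
# The funny-on-the-first-try case: one sample, one keeper.
print(cherry_pick_ratio(1, 1))
```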

On the all-too-rare occasion that the model comes up with several useful outputs from a single user input, the ratio could be something like 2:5, or even 5:5 when every output is useful.

Cherry Picking and Tolerance

Why is the Cherry Pick Ratio useful? Because it gives us a way to quantify how well a generative AI model performs on the harder-to-codify, more subjective task of generating something useful. It enables us to compare the ‘feel’ of one generative model against another. And finally, it lets us discuss interaction with generative AI systems in terms of tolerance: what Cherry Pick Ratio will the user tolerate? This measure of tolerance is directly connected to adoption and delight.

A good estimate of my CPR tolerance when generating one-liner jokes for a blog post is 1:10. In practice, that means that unless a generative AI model can reliably produce at least one useful output in every 10, I am much less likely to use and adopt it.
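A tolerance like this can be expressed as a simple pass/fail check. The sketch below assumes tolerance is stated as the worst acceptable ratio of useful outputs to total outputs; `meets_tolerance` is a hypothetical name, and the counts passed to it are illustrative. Python's `fractions.Fraction` keeps the comparison exact, so 1:10 and 2:20 compare as equal.

```python
from fractions import Fraction


def meets_tolerance(useful: int, total: int, tolerance: Fraction) -> bool:
    """Return True if the observed useful/total rate is at least `tolerance`.

    Hypothetical helper: `tolerance` is the worst ratio the user will accept,
    e.g. Fraction(1, 10) for "one useful output in every ten".
    """
    if total <= 0:
        raise ValueError("total must be positive")
    return Fraction(useful, total) >= tolerance


joke_tolerance = Fraction(1, 10)  # tolerance for one-liner jokes

print(meets_tolerance(1, 10, joke_tolerance))  # exactly at the limit
print(meets_tolerance(1, 25, joke_tolerance))  # too much digging
print(meets_tolerance(3, 20, joke_tolerance))  # 3/20 is better than 1/10
```

The same check could express a developer's release bar, for example `Fraction(1, 4)` for a company that will not ship anything worse than 1:4.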

My tolerance may be vastly different from someone else’s. In fact, my own tolerance varies across the different jobs I am trying to do with generative AI models. As another example, an automated customer service representative for an airline, which requires stable, reliable, and secure technology, may need a CPR of 1:1: every output has to be useful. Compare that with an experimental AI artist exploring and expressing new ideas through the lens of a new model. The artist may tolerate a CPR of 1:100, or even 1:1000, which I’ve seen is common in many generative AI filmmaking workflows.

A system developer also has a CPR tolerance. One company may want to release only models with a CPR no worse than 1:4 to protect a reputation for quality. Another company may be more willing to release models that generate useful outputs less often in exchange for, say, faster generation.

Why not generate lots of outputs for every user input, so that a useful output is almost certainly available? Because every output generated for a prompt carries a real and significant cost. If the model produces too many candidates per prompt, generation can take too long, and so can the user’s review of the entire candidate set.
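One way to see this trade-off is a toy probability model. Under the strong simplifying assumption that each sample is independently useful with probability `p` (so a CPR of roughly 1:10 corresponds to `p = 0.1`), the chance that a batch of `n` candidates contains at least one useful output is `1 - (1 - p)**n`. The function name `chance_of_useful` is hypothetical, and the model ignores review time beyond counting candidates.

```python
def chance_of_useful(p: float, n: int) -> float:
    """Probability that at least one of n samples is useful.

    Assumes each sample is independently useful with probability p,
    which is a simplification: real samples from one prompt are correlated.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    if n < 0:
        raise ValueError("n must be non-negative")
    return 1.0 - (1.0 - p) ** n


p = 0.1  # roughly a 1:10 Cherry Pick Ratio
for n in (1, 5, 10, 20, 50):
    # Each extra candidate raises the odds a little, but the user
    # still has to review all n of them.
    print(f"n={n:>2}  P(at least one useful)={chance_of_useful(p, n):.2f}  "
          f"candidates to review={n}")
```

With `p = 0.1`, ten candidates give only about a 65% chance of a keeper, and fifty push it to about 99%, but the user then has fifty candidates to review. Because real samples from the same prompt tend to be correlated, this independent model is likely optimistic.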

On both sides, tolerance tells us something useful: it defines when a new model is ready for adoption. No more guessing. Developers can set a target ratio and confidently release a model once they achieve it.

Testing the theory

There are a few ways to design experiments around the Cherry Pick Ratio. For example, gather a sample of users and give them a creative brief. Then measure how many input iterations, and how much curation, it takes them to land on a useful final output.

Averaged over a sample group, these measurements could provide a baseline Cherry Pick Ratio. That baseline could then be compared with the same group’s CPR after a training session on, say, how to write better prompts, or compared across different models to understand which works best for them.
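Here is one way such a baseline could be computed, as a sketch: the `Session` record, the helper names, and the session counts are all hypothetical and exist only to show the arithmetic. It reports two kinds of average, because "average" can mean either pooling everyone's outputs or averaging each participant's own ratio.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass
class Session:
    """One participant's attempt at the creative brief (hypothetical schema)."""

    useful: int  # outputs the participant judged useful
    total: int   # every output generated along the way

    def __post_init__(self) -> None:
        if self.total <= 0 or not 0 <= self.useful <= self.total:
            raise ValueError("need total > 0 and 0 <= useful <= total")


def pooled_cpr(sessions: list[Session]) -> Fraction:
    """Sum of useful outputs over sum of all outputs, across every session."""
    if not sessions:
        raise ValueError("no sessions recorded")
    return Fraction(sum(s.useful for s in sessions), sum(s.total for s in sessions))


def mean_cpr(sessions: list[Session]) -> Fraction:
    """Unweighted mean of each participant's own useful/total rate."""
    if not sessions:
        raise ValueError("no sessions recorded")
    rates = [Fraction(s.useful, s.total) for s in sessions]
    return sum(rates, Fraction(0)) / len(rates)


def as_one_in_n(rate: Fraction) -> str:
    """Express a rate as '1:N', with N rounded to one decimal place."""
    if rate == 0:
        return "no useful outputs"
    return f"1:{float(1 / rate):.1f}"


# Illustrative numbers only, not measured data.
before = [Session(1, 12), Session(1, 8), Session(2, 30)]
after = [Session(1, 6), Session(2, 10), Session(1, 9)]

print("baseline (pooled):", as_one_in_n(pooled_cpr(before)))
print("baseline (mean):", as_one_in_n(mean_cpr(before)))
print("after training (pooled):", as_one_in_n(pooled_cpr(after)))
```

Pooling weights each participant by how many outputs they generated, so someone who ran thirty generations counts for more; the unweighted mean treats every participant equally. Which one better reflects a group's tolerance is a design choice the experiment would need to fix up front.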

We could also take a more subjective, qualitative approach and survey testers after 10, 20, or 30 generations. Are they frustrated? Excited? Ready to give up? Analysing these surveys could map how their expectations change over time and empirically connect tolerance and CPR.

Wrapping Up

Today, much of the progress in generative AI focuses on the capabilities of our systems. Think: higher resolution, higher fidelity, richer language, faster generation, and lower cost. That’s useful and necessary work, but it’s not enough. There is also work to be done to understand and communicate how well models help users do what they are trying to do.

We need to understand how hard people are willing to work for an output that satisfies their needs: their tolerance, and what an ideal Cherry Pick Ratio might be.

Because if we do, we’ll create models that are not only theoretically capable of doing amazing things, but that actually do them.